Using the elastic user means using the superuser as a shortcut. You could also create another user, but then you would have to give that user the authority to create indices. The index line makes the index name a combination of the word logstash and the date. The goal is to give it some meaningful name; perhaps nginx* would be better, as you use Logstash to work with all kinds of logs and applications.

Setting codec => rubydebug writes the output to stdout so that you can see that it is working. Now start Logstash in the foreground so that you can see what is going on.
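The index line itself is not reproduced in this section. As a sketch, assuming the common Logstash pattern index => "logstash-%{+YYYY.MM.dd}", the Python below shows how such a daily name is derived. The helper daily_index_name is hypothetical. It reflects the fact that Logstash's date formatting reads the event's @timestamp, which is kept in UTC, so an event logged late in the evening in local time can land in the next day's index.

```python
from datetime import datetime, timedelta, timezone


def daily_index_name(prefix: str, timestamp: datetime) -> str:
    """Hypothetical helper: build a daily index name such as logstash-2019.06.03.

    Assumes the Logstash pattern "<prefix>-%{+YYYY.MM.dd}", which formats the
    event's @timestamp in UTC, so the timezone-aware timestamp is converted
    to UTC before the date is taken.
    """
    utc = timestamp.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


if __name__ == "__main__":
    noon_utc = datetime(2019, 6, 3, 12, 0, tzinfo=timezone.utc)
    print(daily_index_name("logstash", noon_utc))  # logstash-2019.06.03

    # 22:00 at UTC-5 is 03:00 UTC the next day, so it lands in the next index.
    evening_utc_minus_5 = datetime(2019, 6, 3, 22, 0, tzinfo=timezone(timedelta(hours=-5)))
    print(daily_index_name("nginx", evening_utc_minus_5))  # nginx-2019.06.04
```

Swapping the prefix for nginx gives the more descriptive kind of name suggested for this use case.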

FILEBEATS PORT PASSWORD

This part is disappointing, as the ElasticSearch output in Logstash does not let you use the cloud.id and th to connect to ElasticSearch, as Beats does. So you have to give it the URL and the userid and password. Use the same userid and password that you log in with. Use the example below, as even the examples in the ElasticSearch documentation don't work; instead, tech writers all reuse the same working example.

FILEBEATS PORT PLUS

Start Filebeat:

sudo /usr/share/filebeat/bin/filebeat -e -c /etc/filebeat/filebeat.yml

The -e tells it to write logs to stdout, so you can see it working and check for errors. (The filebeat.yml file contains comment lines such as "# Optional protocol and basic auth credentials." and "# Enabled ilm (beta) to use index lifecycle management instead daily indices.")

Now edit /usr/share/logstash/logstash-7.1.1/config/nf and tell Logstash to listen to Beats on port 5044. The parsing step basically understands different file formats, plus it can be extended. To understand it in detail you would have to understand Grok.
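The grok configuration itself is not reproduced here. As a rough illustration of what that parsing step does, the Python below uses a plain regular expression (not grok) to split one nginx access line in nginx's default "combined" format into named fields. The pattern, the helper parse_access_line and the sample line are assumptions for illustration; the field names were chosen to resemble those produced by grok's COMBINEDAPACHELOG pattern, and real grok patterns are more forgiving of odd lines.

```python
import re
from typing import Dict, Optional

# Regex (not grok) for nginx's default "combined" access-log format:
# $remote_addr - $remote_user [$time_local] "$request" $status
# $body_bytes_sent "$http_referer" "$http_user_agent"
COMBINED_LOG = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>[A-Z]+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_access_line(message: str) -> Optional[Dict[str, str]]:
    """Hypothetical helper: return the named fields, or None if the line does not match."""
    match = COMBINED_LOG.match(message)
    return match.groupdict() if match else None


if __name__ == "__main__":
    line = ('93.180.71.3 - - [17/May/2015:08:05:32 +0000] '
            '"GET /downloads/product_1 HTTP/1.1" 304 0 "-" "Debian APT-HTTP/1.3"')
    fields = parse_access_line(line)
    print(fields["verb"], fields["request"], fields["response"])  # GET /downloads/product_1 304
```

The operation (GET), the URL and the status code become separate fields that ElasticSearch can index and search on.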

FILEBEATS PORT INSTALL

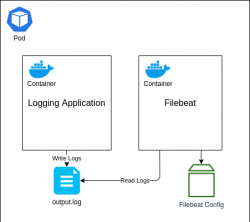

(This article is part of our ElasticSearch Guide.)

Logstash is needed because Beats will convert the logs to JSON, the format required by ElasticSearch, but it will not parse the GET or POST message field sent to the web server to pull out the URL, operation, location, etc. In this setup, Beats is configured to watch for new log entries written to /var/logs/nginx*.logs, and Logstash is configured to listen to Beats, parse those logs, and then send them to ElasticSearch.

Download and install Beats: wget
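To make that division of labour concrete, here is a simplified Python sketch of the kind of JSON event a shipper like Beats produces from one nginx line: the raw text travels whole in a message field, and nothing inside it (the GET, the URL, the status code) has been split out yet. The event layout, the helper to_shipped_event and the example path are assumptions for illustration; real Filebeat events carry considerably more metadata.

```python
import json
from datetime import datetime, timezone


def to_shipped_event(line: str, path: str) -> str:
    """Hypothetical helper: wrap one raw log line in a simplified Beats-style JSON event.

    The raw text is kept whole in "message"; no fields are extracted from it.
    """
    event = {
        "@timestamp": datetime.now(timezone.utc).isoformat(),
        "message": line,                  # unparsed: GET, URL and status are still inside
        "log": {"file": {"path": path}},  # simplified source metadata (assumed layout)
    }
    return json.dumps(event)


if __name__ == "__main__":
    raw = '93.180.71.3 - - [17/May/2015:08:05:32 +0000] "GET /downloads/product_1 HTTP/1.1" 304 0 "-" "-"'
    print(to_shipped_event(raw, "/var/log/nginx/access.log"))
```

Pulling the operation, URL and response code out of message is the step Logstash adds in this setup.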

FILEBEATS PORT HOW TO

Here we explain how to send logs to ElasticSearch using Beats (aka Filebeat) and Logstash. We will parse nginx web server logs, as it's one of the easiest use cases. We previously wrote about how to parse nginx logs using Beats by itself without Logstash. We also use Elastic Cloud instead of our own local installation of ElasticSearch, but the instructions for a stand-alone installation are the same, except that in most cases you don't need to use a userid and password with a stand-alone installation.

I ran into an index issue while trying to add a second Filebeat instance. Imagine a network that needs to run different Filebeats on different hosts, some shipping IIS logs, some syslog, and some custom application logs. My Logstash is configured to accept input on different ports depending on what I'm configuring to ingest. Perhaps there's a problem when you have multiple beats inputs with different ports? I added Filebeat on an IIS server, configured it solely for IIS, and shipped its logs to the dedicated Logstash input port configured with type=>"iis". I don't want these logs to use the filebeat-* index, so I explicitly configured the output index to "iis-*". Now, in my ElasticSearch data, IIS fields such as CS-URI-Stem, CS-METHOD, S-PORT, etc. were added to my other index patterns like filebeat-*, even though these fields, made by a grok filter, should only apply when type = iis. My other issue is that somehow the iis-* index has the fields for ALL OTHER INDEXES (metricbeat, winlogbeat, auditbeat): all of the fields in those indexes exist in iis-* and I can't get rid of them. I even tried to overwrite the template.
